-

Engineering

How Grab is accelerating growth with real-time personalization using Customer Data Platform scenarios

Grab’s Customer Data Platform (CDP) introduces Scenarios, which enable real-time personalization at scale. By combining event triggers, geo-fencing, historical data, and predictive models, Grab delivers dynamic user experiences such as mall offers, traveler recommendations, and ad retargeting. Results so far include a conversion uplift of more than 3%, driving growth across Southeast Asia.

-

Engineering · Data

A Decade of Defense: Celebrating Grab's 10th Year Bug Bounty Program

Discover how Grab has championed cybersecurity for a decade through its Bug Bounty Program. This article covers the milestones, insights, and collaborative efforts that have fortified Grab's defenses, ensuring a secure and reliable platform for millions of users.

-

Engineering · Data

Real-time data quality monitoring: Kafka stream contracts with syntactic and semantic tests

Discover how Grab's Coban Platform transforms real-time data quality monitoring for Kafka streams. Learn how syntactic and semantic tests help stream users ensure reliable data, prevent cascading errors, and accelerate AI-driven innovation.

-

Engineering · Data

SpellVault’s evolution: Beyond LLM apps, towards the agentic future

Discover SpellVault’s evolution from its early RAG-based foundations and plugin ecosystem to its transformation into a tool-driven, agentic framework that empowers users to build AI agents that are powerful, flexible, and future-ready.

-

Engineering

Grab's Mac Cloud Exit supercharges macOS CI/CD

Discover how our transition from cloud-based Mac hardware infrastructure to a colocation cluster within Southeast Asia has revolutionized our macOS CI/CD, enhancing performance and reducing costs.

-

Engineering · Data

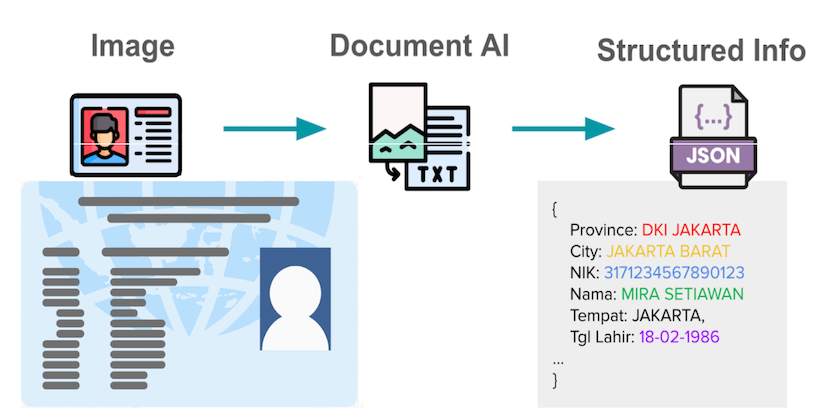

How we built a custom vision LLM to improve document processing at Grab

Electronic Know Your Customer (e-KYC) faces challenges from non-standardized document formats and local Southeast Asian (SEA) languages, which existing LLMs do not support well. We trained a Vision LLM from scratch, modifying open-source models to be 50% faster while maintaining accuracy. These models now serve live production traffic across Grab's ecosystem for merchant, driver, and user onboarding.

-

Engineering · Data

Machine-learning predictive autoscaling for Flink

Explore how Grab uses machine learning to predictively scale its Flink data processing workloads.

-

Demystifying user journeys: Revolutionizing troubleshooting with auto tracking

In mobile development, understanding user journeys is key to effective troubleshooting. This post explores how Grab's AutoTrack SDK has transformed session tracking. By addressing incomplete user journey data, Grab has significantly reduced downtime, boosted customer satisfaction, and improved developer efficiency.